Micros Keyboard for Kitchen Display System Not Reading

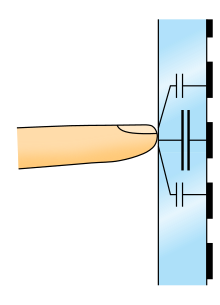

A user operating a touchscreen

A touchscreen or touch screen is the assembly of both an input ('touch panel') and output ('display') device. The touch panel is normally layered on the top of an electronic visual display of an information processing system. The display is often an LCD, AMOLED or OLED display while the system is usually a laptop, tablet, or smartphone. A user can give input or control the information processing system through simple or multi-touch gestures by touching the screen with a special stylus or one or more fingers.[1] Some touchscreens use ordinary or specially coated gloves to work while others may only work using a special stylus or pen. The user can use the touchscreen to react to what is displayed and, if the software allows, to control how it is displayed; for example, zooming to increase the text size.

The touchscreen enables the user to interact directly with what is displayed, rather than using a mouse, touchpad, or other such devices (other than a stylus, which is optional for most modern touchscreens).[2]

Touchscreens are common in devices such as game consoles, personal computers, electronic voting machines, and point-of-sale (POS) systems. They can also be attached to computers or, as terminals, to networks. They play a prominent role in the design of digital appliances such as personal digital assistants (PDAs) and some e-readers. Touchscreens are also important in educational settings such as classrooms or on college campuses.[3]

The popularity of smartphones, tablets, and many types of information appliances is driving the demand and acceptance of common touchscreens for portable and functional electronics. Touchscreens are found in the medical field, heavy industry, automated teller machines (ATMs), and kiosks such as museum displays or room automation, where keyboard and mouse systems do not allow a suitably intuitive, rapid, or accurate interaction by the user with the display's content.

Historically, the touchscreen sensor and its accompanying controller-based firmware have been made available by a wide array of after-market system integrators, and not by display, chip, or motherboard manufacturers. Display manufacturers and chip manufacturers have acknowledged the trend toward acceptance of touchscreens as a user interface component and have begun to integrate touchscreens into the fundamental design of their products.

History

One predecessor of the modern touch screen includes stylus-based systems. In 1946, a patent was filed by Philco Company for a stylus designed for sports telecasting which, when placed against an intermediate cathode ray tube (CRT) display, would amplify and add to the original signal. In effect, it was used for temporarily drawing arrows or circles onto a live television broadcast (US 2487641A, Denk, William E, "Electronic pointer for television images", issued 1949-11-08). Later inventions built upon this system to free telewriting styli from their mechanical bindings. By transcribing what a user draws onto a computer, it could be saved for future use (US 3089918A, Graham, Robert E, "Telewriting apparatus", issued 1963-05-14).

The first version of a touchscreen which operated independently of the light produced from the screen was patented by AT&T Corporation (US 3016421A, Harmon, Leon D, "Electrographic transmitter", issued 1962-01-09). This touchscreen used a matrix of collimated lights shining orthogonally across the touch surface. When a beam is interrupted by a stylus, the photodetectors which are no longer receiving a signal can be used to determine where the interruption is. Later iterations of matrix-based touchscreens built upon this by adding more emitters and detectors to improve resolution, pulsing emitters to improve optical signal-to-noise ratio, and a nonorthogonal matrix to remove shadow readings when using multi-touch.

The first finger-driven touch screen was developed by Eric Johnson of the Royal Radar Establishment, located in Malvern, England, who described his work on capacitive touchscreens in a short article published in 1965[8] [9] and then more fully, with photographs and diagrams, in an article published in 1967.[10] The application of touch technology for air traffic control was described in an article published in 1968.[11] Frank Beck and Bent Stumpe, engineers from CERN (European Organization for Nuclear Research), developed a transparent touchscreen in the early 1970s,[12] based on Stumpe's work at a television factory in the early 1960s. Then manufactured by CERN, and shortly after by industry partners,[13] it was put to use in 1973.[14] In the mid-1960s, another precursor of touchscreens, an ultrasonic-curtain-based pointing device in front of a terminal display, had been developed by a team around Rainer Mallebrein at Telefunken Konstanz for an air traffic control system.[15] In 1970, this evolved into a device named "Touchinput-Einrichtung" ("touch input facility") for the SIG 50 terminal, using a conductively coated glass screen in front of the display.[16] [15] This was patented in 1971 and the patent was granted a couple of years later.[16] [15] The same team had already invented and marketed the Rollkugel mouse RKS 100-86 for the SIG 100-86 a couple of years earlier.[16]

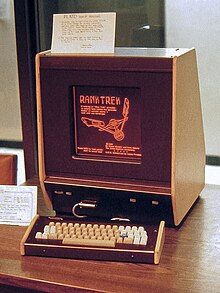

In 1972, a group at the University of Illinois filed for a patent on an optical touchscreen[17] that became a standard part of the Magnavox Plato IV Student Terminal, and thousands were built for this purpose. These touchscreens had a crossed array of 16×16 infrared position sensors, each composed of an LED on one edge of the screen and a matched phototransistor on the other edge, all mounted in front of a monochrome plasma display panel. This arrangement could sense any fingertip-sized opaque object in close proximity to the screen. A similar touchscreen was used on the HP-150 starting in 1983. The HP 150 was one of the world's earliest commercial touchscreen computers.[18] HP mounted their infrared transmitters and receivers around the bezel of a 9-inch Sony cathode ray tube (CRT).

In 1977, an American company, Elographics, in partnership with Siemens, began work on developing a transparent implementation of an existing opaque touchpad technology, US patent No. 3,911,215, October 7, 1975, which had been developed by Elographics' founder George Samuel Hurst.[19] The resulting resistive technology touch screen was first shown in 1982.[20]

In 1984, Fujitsu released a touch pad for the Micro 16 to accommodate the complexity of kanji characters, which were stored as tiled graphics.[21] In 1985, Sega released the Terebi Oekaki, also known as the Sega Graphic Board, for the SG-1000 video game console and SC-3000 home computer. It consisted of a plastic pen and a plastic board with a transparent window where pen presses are detected. It was used primarily with a drawing software application.[22] A graphic touch tablet was released for the Sega AI computer in 1986.[23] [24]

Touch-sensitive control-display units (CDUs) were evaluated for commercial aircraft flight decks in the early 1980s. Initial research showed that a touch interface would reduce pilot workload as the crew could then select waypoints, functions and actions, rather than be "head down" typing latitudes, longitudes, and waypoint codes on a keyboard. An effective integration of this technology was aimed at helping flight crews maintain a high level of situational awareness of all major aspects of the vehicle operations including the flight path, the functioning of various aircraft systems, and moment-to-moment human interactions.[25]

In the early 1980s, General Motors tasked its Delco Electronics division with a project aimed at replacing an automobile's non-essential functions (i.e. other than throttle, transmission, braking, and steering) from mechanical or electro-mechanical systems with solid state alternatives wherever possible. The finished device was dubbed the ECC for "Electronic Control Center", a digital computer and software control system hardwired to various peripheral sensors, servos, solenoids, antenna and a monochrome CRT touchscreen that functioned both as display and sole method of input.[26] The ECC replaced the traditional mechanical stereo, fan, heater and air conditioner controls and displays, and was capable of providing very detailed and specific information about the vehicle's cumulative and current operating status in real time. The ECC was standard equipment on the 1985–1989 Buick Riviera and later the 1988–1989 Buick Reatta, but was unpopular with consumers, partly due to the technophobia of some traditional Buick customers, but mostly because of costly technical problems suffered by the ECC's touchscreen which would render climate control or stereo operation impossible.[27]

Multi-touch technology began in 1982, when the University of Toronto's Input Research Group developed the first human-input multi-touch system, using a frosted-glass panel with a camera placed behind the glass. In 1985, the University of Toronto group, including Bill Buxton, developed a multi-touch tablet that used capacitance rather than bulky camera-based optical sensing systems (see History of multi-touch).

The first commercially available graphical point-of-sale (POS) software was demonstrated on the 16-bit Atari 520ST color computer. It featured a color touchscreen widget-driven interface.[28] The ViewTouch[29] POS software was first shown by its developer, Gene Mosher, at the Atari Computer demonstration area of the Fall COMDEX expo in 1986.[30]

In 1987, Casio launched the Casio PB-1000 pocket computer with a touchscreen consisting of a 4×4 matrix, resulting in 16 touch areas in its small LCD graphic screen.

Touchscreens had a bad reputation of being imprecise until 1988. Most user-interface books would state that touchscreen selections were limited to targets larger than the average finger. At the time, selections were done in such a way that a target was selected as soon as the finger came over it, and the corresponding action was performed immediately. Errors were common, due to parallax or calibration problems, leading to user frustration. The "lift-off strategy"[31] was introduced by researchers at the University of Maryland Human–Computer Interaction Lab (HCIL). As users touch the screen, feedback is provided as to what will be selected: users can adjust the position of the finger, and the action takes place only when the finger is lifted off the screen. This allowed the selection of small targets, down to a single pixel on a 640×480 Video Graphics Array (VGA) screen (a standard of that time).
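The lift-off strategy described above can be illustrated with a minimal sketch. The class name `LiftOffSelector`, its method names, and the rectangular target format are assumptions made for this illustration; they are not taken from the HCIL work. The point shown is only that moving the finger updates feedback, while the action fires on release.

```python
class LiftOffSelector:
    """Hypothetical sketch: highlight while touching, select only on lift-off."""

    def __init__(self, targets):
        # targets maps a name to a rectangle (x0, y0, x1, y1); format is assumed.
        self.targets = targets
        self.highlighted = None

    def _hit(self, x, y):
        # Return the name of the target under (x, y), or None.
        for name, (x0, y0, x1, y1) in self.targets.items():
            if x0 <= x < x1 and y0 <= y < y1:
                return name
        return None

    def touch_move(self, x, y):
        # While the finger is down, only the feedback (highlight) changes.
        self.highlighted = self._hit(x, y)
        return self.highlighted

    def touch_up(self):
        # The action takes place only when the finger leaves the screen.
        selected, self.highlighted = self.highlighted, None
        return selected
```

With this design, a user who lands on the wrong target can slide to the intended one before lifting the finger, which is what makes small targets selectable.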

Sears et al. (1990)[32] gave a review of academic research on single and multi-touch human–computer interaction of the time, describing gestures such as rotating knobs, adjusting sliders, and swiping the screen to activate a switch (or a U-shaped gesture for a toggle switch). The HCIL team developed and studied small touchscreen keyboards (including a study that showed users could type at 25 wpm on a touchscreen keyboard), aiding their introduction on mobile devices. They also designed and implemented multi-touch gestures such as selecting a range of a line, connecting objects, and a "tap-click" gesture to select while maintaining location with another finger.

In 1990, HCIL demonstrated a touchscreen slider,[33] which was later cited as prior art in the lock screen patent litigation between Apple and other touchscreen mobile phone vendors (in relation to U.S. Patent 7,657,849).[34]

In 1991–1992, the Sun Star7 prototype PDA implemented a touchscreen with inertial scrolling.[35] In 1993, IBM released the IBM Simon, the first touchscreen phone.

An early attempt at a handheld game console with touchscreen controls was Sega's intended successor to the Game Gear, though the device was ultimately shelved and never released due to the high cost of touchscreen technology in the early 1990s.

The first mobile phone with a capacitive touchscreen was the LG Prada, released in May 2007 (which was before the first iPhone).[36]

Touchscreens would not be popularly used for video games until the release of the Nintendo DS in 2004.[37] Until recently, most consumer touchscreens could only sense one point of contact at a time, and few have had the capability to sense how hard one is touching. This has changed with the commercialization of multi-touch technology, and the Apple Watch being released with a force-sensitive display in April 2015.

In 2007, 93% of touchscreens shipped were resistive and only 4% were projected capacitance. In 2013, 3% of touchscreens shipped were resistive and 90% were projected capacitance.[38]

Technologies

There are a variety of touchscreen technologies with different methods of sensing touch.[32]

Resistive

A resistive touchscreen panel comprises several thin layers, the most important of which are two transparent electrically resistive layers facing each other with a thin gap between. The top layer (that which is touched) has a coating on the underside surface; just beneath it is a similar resistive layer on top of its substrate. One layer has conductive connections along its sides, the other along top and bottom. A voltage is applied to one layer and sensed by the other. When an object, such as a fingertip or stylus tip, presses down onto the outer surface, the two layers touch to become connected at that point.[39] The panel then behaves as a pair of voltage dividers, one axis at a time. By rapidly switching between each layer, the position of pressure on the screen can be detected.
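The voltage-divider behaviour can be sketched numerically. The following is a minimal illustration, assuming an ideal, perfectly linear panel read by a 10-bit analog-to-digital converter; the function name `resistive_position` and the `adc_max` default are assumptions for this example, and real controllers also apply calibration and filtering.

```python
def resistive_position(adc_x, adc_y, width, height, adc_max=1023):
    """Map two divider readings (one per axis) to screen coordinates.

    Assumes an ideal linear divider: the sensed voltage on each axis is
    proportional to the distance of the contact point along that axis.
    adc_max=1023 corresponds to an assumed 10-bit ADC.
    """
    x = adc_x / adc_max * width   # reading taken while the X layer is driven
    y = adc_y / adc_max * height  # reading taken while the Y layer is driven
    return x, y
```

For example, on an assumed 320×240 panel, a full-scale reading on the X axis and a zero reading on the Y axis would map to the corner at x = 320, y = 0.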

Resistive touch is used in restaurants, factories and hospitals due to its high tolerance for liquids and contaminants. A major benefit of resistive-touch technology is its low cost. Additionally, as only sufficient pressure is necessary for the touch to be sensed, they may be used with gloves on, or by using anything rigid as a finger substitute. Disadvantages include the need to press down, and a risk of damage by sharp objects. Resistive touchscreens also suffer from poorer contrast, due to having additional reflections (i.e. glare) from the layers of material placed over the screen.[40] This is the type of touchscreen that was used by Nintendo in the DS family, the 3DS family, and the Wii U GamePad.[41]

Surface acoustic wave

Surface acoustic wave (SAW) technology uses ultrasonic waves that pass over the touchscreen panel. When the panel is touched, a portion of the wave is absorbed. The change in ultrasonic waves is processed by the controller to determine the position of the touch event. Surface acoustic wave touchscreen panels can be damaged by outside elements. Contaminants on the surface can also interfere with the functionality of the touchscreen.

SAW devices have a wide range of applications, including delay lines, filters, correlators and DC to DC converters.

Capacitive

Capacitive touchscreen of a mobile phone

The Casio TC500 capacitive touch sensor watch from 1983, with angled light exposing the touch sensor pads and traces etched onto the top watch glass surface.

A capacitive touchscreen panel consists of an insulator, such as glass, coated with a transparent conductor, such as indium tin oxide (ITO).[42] As the human body is also an electrical conductor, touching the surface of the screen results in a distortion of the screen's electrostatic field, measurable as a change in capacitance. Different technologies may be used to determine the location of the touch. The location is then sent to the controller for processing. Touchscreens that use silver instead of ITO exist, as ITO causes several environmental problems due to the use of indium.[43] [44] [45] [46] The controller is typically a complementary metal-oxide-semiconductor (CMOS) application-specific integrated circuit (ASIC) chip, which in turn usually sends the signals to a CMOS digital signal processor (DSP) for processing.[47] [48]

Unlike a resistive touchscreen, some capacitive touchscreens cannot be used to detect a finger through electrically insulating material, such as gloves. This disadvantage especially affects usability in consumer electronics, such as touch tablet PCs and capacitive smartphones in cold weather when people may be wearing gloves. It can be overcome with a special capacitive stylus, or a special-application glove with an embroidered patch of conductive thread allowing electrical contact with the user's fingertip.

A low-quality switching-mode power supply unit with an accordingly unstable, noisy voltage may temporarily interfere with the precision, accuracy and sensitivity of capacitive touch screens.[49] [50] [51]

Some capacitive display manufacturers continue to develop thinner and more accurate touchscreens. Those for mobile devices are now being produced with 'in-cell' technology, such as in Samsung's Super AMOLED screens, that eliminates a layer by building the capacitors inside the display itself. This type of touchscreen reduces the visible distance between the user's finger and what the user is touching on the screen, reducing the thickness and weight of the display, which is desirable in smartphones.

A simple parallel-plate capacitor has two conductors separated by a dielectric layer. Most of the energy in this system is concentrated directly between the plates. Some of the energy spills over into the area outside the plates, and the electric field lines associated with this effect are called fringing fields. Part of the challenge of making a practical capacitive sensor is to design a set of printed circuit traces which direct fringing fields into an active sensing area accessible to a user. A parallel-plate capacitor is not a good choice for such a sensor pattern. Placing a finger near fringing electric fields adds conductive surface area to the capacitive system. The additional charge storage capacity added by the finger is known as finger capacitance, or CF. The capacitance of the sensor without a finger present is known as parasitic capacitance, or CP.

Surface capacitance

In this basic technology, only one side of the insulator is coated with a conductive layer. A small voltage is applied to the layer, resulting in a uniform electrostatic field. When a conductor, such as a human finger, touches the uncoated surface, a capacitor is dynamically formed. The sensor's controller can determine the location of the touch indirectly from the change in the capacitance as measured from the four corners of the panel. As it has no moving parts, it is moderately durable but has limited resolution, is prone to false signals from parasitic capacitive coupling, and needs calibration during manufacture. It is therefore most often used in simple applications such as industrial controls and kiosks.[52]

Although some standard capacitance detection methods are projective, in the sense that they can be used to detect a finger through a non-conductive surface, they are very sensitive to fluctuations in temperature, which expand or contract the sensing plates, causing fluctuations in the capacitance of these plates.[53] These fluctuations result in a lot of background noise, so a strong finger signal is required for accurate detection. This limits applications to those where the finger directly touches the sensing element or is sensed through a relatively thin non-conductive surface.

Projected capacitance

Back side of a Multitouch Globe, based on projected capacitive touch (PCT) technology

8 x 8 projected capacitance touchscreen manufactured using 25 micron insulation coated copper wire embedded in a clear polyester film.

This diagram shows how eight inputs to a lattice touchscreen or keypad create 28 unique intersections, as opposed to 16 intersections created using a standard x/y multiplexed touchscreen.

Schema of projected-capacitive touchscreen

Projected capacitive touch (PCT; also PCAP) technology is a variant of capacitive touch technology in which sensitivity to touch, accuracy, resolution and speed of touch have been greatly improved by the use of a simple form of "Artificial Intelligence". This intelligent processing enables finger sensing to be projected, accurately and reliably, through very thick glass and even double glazing.[54]

Some modern PCT touch screens are composed of thousands of discrete keys,[55] but most PCT touch screens are made of an x/y matrix of rows and columns of conductive material, layered on sheets of glass. This can be done either by etching a single conductive layer to form a grid pattern of electrodes, by etching two separate, perpendicular layers of conductive material with parallel lines or tracks to form a grid, or by forming an x/y grid of fine, insulation coated wires in a single layer. The number of fingers that can be detected simultaneously is determined by the number of cross-over points (x * y). However, the number of cross-over points can be almost doubled by using a diagonal lattice layout, where, instead of x elements only ever crossing y elements, each conductive element crosses every other element.[56]
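The difference between the two layouts is a matter of counting. As a small sketch (the function names are chosen for this illustration), an x/y matrix with its inputs split evenly between rows and columns has rows × columns crossings, while a lattice in which every element crosses every other has one crossing per pair of elements:

```python
from math import comb


def xy_intersections(n_inputs):
    """Crossings for an x/y matrix, assuming inputs are split evenly."""
    rows = n_inputs // 2
    cols = n_inputs - rows
    return rows * cols


def lattice_intersections(n_inputs):
    """Crossings when every element crosses every other element once."""
    return comb(n_inputs, 2)
```

For eight inputs this gives 16 crossings for the x/y matrix and 28 for the lattice, matching the figures in the diagram caption above.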

The conductive layer is often transparent, being made of indium tin oxide (ITO), a transparent electrical conductor. In some designs, voltage applied to this grid creates a uniform electrostatic field, which can be measured. When a conductive object, such as a finger, comes into contact with a PCT panel, it distorts the local electrostatic field at that point. This is measurable as a change in capacitance. If a finger bridges the gap between two of the "tracks", the charge field is further interrupted and detected by the controller. The capacitance can be changed and measured at every individual point on the grid. This system is able to accurately track touches.[57]

Due to the top layer of a PCT being glass, it is sturdier than less-expensive resistive touch technology. Unlike traditional capacitive touch technology, it is possible for a PCT system to sense a passive stylus or gloved finger. However, moisture on the surface of the panel, high humidity, or collected dust can interfere with performance. These environmental factors, however, are not a problem with 'fine wire' based touchscreens, because wire-based touchscreens have a much lower 'parasitic' capacitance, and there is greater distance between neighbouring conductors.

There are two types of PCT: mutual capacitance and self-capacitance.

Mutual capacitance

This is a common PCT approach, which makes use of the fact that most conductive objects are able to hold a charge if they are very close together. In mutual capacitive sensors, a capacitor is inherently formed by the row trace and column trace at each intersection of the grid. A 16×14 array, for example, would have 224 independent capacitors. A voltage is applied to the rows or columns. Bringing a finger or conductive stylus close to the surface of the sensor changes the local electrostatic field, which in turn reduces the mutual capacitance. The capacitance change at every individual point on the grid can be measured to accurately determine the touch location by measuring the voltage in the other axis. Mutual capacitance allows multi-touch operation where multiple fingers, palms or styli can be accurately tracked at the same time.
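Because every intersection is measured independently, finding touches amounts to scanning the grid for intersections whose capacitance has dropped. The sketch below assumes the controller already provides a baseline grid and a freshly measured grid in arbitrary units; the function name `find_touches` and the fixed threshold are assumptions for illustration, and real controllers add filtering and interpolation between neighbouring cells.

```python
def find_touches(baseline, measured, threshold):
    """Return (row, column) of every intersection whose mutual capacitance
    fell by at least `threshold` relative to the untouched baseline."""
    touches = []
    for r, (base_row, meas_row) in enumerate(zip(baseline, measured)):
        for c, (base, meas) in enumerate(zip(base_row, meas_row)):
            # A nearby finger reduces the mutual capacitance at this node.
            if base - meas >= threshold:
                touches.append((r, c))
    return touches
```

Since each of the 224 nodes of a 16×14 array is evaluated on its own, two fingers produce two unambiguous entries in the result.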

Self-capacitance

Self-capacitance sensors can have the same X-Y grid as mutual capacitance sensors, but the columns and rows operate independently. With self-capacitance, the capacitive load of a finger is measured on each column or row electrode by a current meter, or the change in frequency of an RC oscillator.[58]

A finger may be detected anywhere along the whole length of a row. If that finger is also detected by a column, then it can be assumed that the finger position is at the intersection of this row/column pair. This allows for the speedy and accurate detection of a single finger, but it causes some ambiguity if more than one finger is to be detected.[59] Two fingers may have four possible detection positions, only two of which are true. However, by selectively de-sensitizing any touch-points in contention, conflicting results are easily eliminated.[60] This enables self-capacitance to be used for multi-touch operation.
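The two-finger ambiguity follows directly from the fact that rows and columns are sensed independently. As a minimal sketch (the function name `candidate_positions` is an assumption for this illustration), the controller only knows which rows and which columns are active, so every row/column combination is a candidate:

```python
from itertools import product


def candidate_positions(active_rows, active_cols):
    """All row/column pairings consistent with independent row and
    column readings; some may be 'ghost' points with no finger."""
    return sorted(product(active_rows, active_cols))


# Two real fingers at (row 1, col 2) and (row 4, col 6):
true_touches = {(1, 2), (4, 6)}
rows = {r for r, _ in true_touches}
cols = {c for _, c in true_touches}
candidates = candidate_positions(rows, cols)
ghosts = [p for p in candidates if p not in true_touches]
```

Here `candidates` holds four positions, and `ghosts` holds the two that do not correspond to a finger, which is the ambiguity the de-sensitizing step is meant to resolve.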

Alternatively, ambiguity can be avoided by applying a "de-sensitizing" signal to all but one of the columns.[60] This leaves only a short section of any row sensitive to touch. By selecting a sequence of these sections along the row, it is possible to determine the accurate position of multiple fingers along that row. This process can then be repeated for all the other rows until the whole screen has been scanned.

Self-capacitive touch screen layers are used on mobile phones such as the Sony Xperia Sola,[61] the Samsung Galaxy S4, Galaxy Note 3, Galaxy S5, and Galaxy Alpha.

Self-capacitance is far more sensitive than mutual capacitance and is mainly used for single touch, simple gesturing and proximity sensing where the finger does not even have to touch the glass surface. Mutual capacitance is mainly used for multitouch applications.[62] Many touchscreen manufacturers use both self and mutual capacitance technologies in the same product, thereby combining their individual benefits.[63]

Use of stylus on capacitive screens

Capacitive touchscreens do not necessarily need to be operated by a finger, but until recently the special styli required could be quite expensive to purchase. The cost of this technology has fallen greatly in recent years and capacitive styli are now widely available for a nominal charge, and often given away free with mobile accessories. These consist of an electrically conductive shaft with a soft conductive rubber tip, thereby resistively connecting the fingers to the tip of the stylus.

Infrared grid

Infrared sensors mounted around the display watch for a user's touchscreen input on this PLATO V terminal in 1981. The monochromatic plasma display's characteristic orange glow is illustrated.

An infrared touchscreen uses an array of X-Y infrared LED and photodetector pairs around the edges of the screen to detect a disruption in the pattern of LED beams. These LED beams cross each other in vertical and horizontal patterns. This helps the sensors pick up the exact location of the touch. A major benefit of such a system is that it can detect essentially any opaque object including a finger, gloved finger, stylus or pen. It is generally used in outdoor applications and POS systems that cannot rely on a conductor (such as a bare finger) to activate the touchscreen. Unlike capacitive touchscreens, infrared touchscreens do not require any patterning on the glass, which increases durability and optical clarity of the overall system. Infrared touchscreens are sensitive to dirt and dust that can interfere with the infrared beams, and suffer from parallax in curved surfaces and accidental press when the user hovers a finger over the screen while searching for the item to be selected.
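Locating a touch from interrupted beams can be sketched as follows. The example assumes the controller reports the indices of the blocked vertical and horizontal beams, and takes the centre of each blocked group as the touch coordinate; the function name `ir_touch_location` and the beam-index units are assumptions for this illustration.

```python
def ir_touch_location(x_blocked, y_blocked):
    """Estimate a single touch position, in beam-index units, from the
    lists of interrupted X-axis and Y-axis beams.

    Returns None unless at least one beam is blocked on each axis.
    """
    if not x_blocked or not y_blocked:
        return None
    # A fingertip usually blocks a few adjacent beams; use their centre.
    x = sum(x_blocked) / len(x_blocked)
    y = sum(y_blocked) / len(y_blocked)
    return x, y
```

Any opaque object interrupts the beams in the same way, which is why this approach works with gloves or a pen.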

Infrared acrylic projection

A translucent acrylic sheet is used as a rear-projection screen to display information. The edges of the acrylic sheet are illuminated by infrared LEDs, and infrared cameras are focused on the back of the sheet. Objects placed on the sheet are detectable by the cameras. When the sheet is touched by the user, the deformation results in leakage of infrared light which peaks at the points of maximum pressure, indicating the user's touch location. Microsoft's PixelSense tablets use this technology.

Optical imaging

Optical touchscreens are a relatively modern development in touchscreen technology, in which two or more image sensors (such as CMOS sensors) are placed around the edges (mostly the corners) of the screen. Infrared backlights are placed in the sensor's field of view on the opposite side of the screen. A touch blocks some lights from the sensors, and the location and size of the touching object can be calculated (see visual hull). This technology is growing in popularity due to its scalability, versatility, and affordability for larger touchscreens.

Dispersive signal technology

Introduced in 2002 by 3M, this system detects a touch by using sensors to measure the piezoelectricity in the glass. Complex algorithms interpret this information and provide the actual location of the touch.[64] The technology is unaffected by dust and other outside elements, including scratches. Since there is no need for additional elements on screen, it also claims to provide excellent optical clarity. Any object can be used to generate touch events, including gloved fingers. A downside is that after the initial touch, the system cannot detect a motionless finger. However, for the same reason, resting objects do not disrupt touch recognition.

Acoustic pulse recognition

The key to this technology is that a touch at any one position on the surface generates a sound wave in the substrate which then produces a unique combined signal as measured by three or more tiny transducers attached to the edges of the touchscreen. The digitized signal is compared to a list corresponding to every position on the surface, determining the touch location. A moving touch is tracked by rapid repetition of this process. Extraneous and ambient sounds are ignored since they do not match any stored sound profile. The technology differs from other sound-based technologies by using a simple look-up method rather than expensive signal-processing hardware. As with the dispersive signal technology system, a motionless finger cannot be detected after the initial touch. However, for the same reason, the touch recognition is not disrupted by any resting objects. The technology was created by SoundTouch Ltd in the early 2000s, as described by the patent family EP1852772, and introduced to the market by Tyco International's Elo division in 2006 as Acoustic Pulse Recognition.[65] The touchscreen used by Elo is made of ordinary glass, giving good durability and optical clarity. The technology usually retains accuracy with scratches and dust on the screen. The technology is also well suited to displays that are physically larger.
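The look-up idea can be sketched as a nearest-profile search. This is only an illustration of the principle under simplifying assumptions: each stored profile is reduced to a short list of numbers, similarity is a sum of squared differences, and the name `locate_touch` and the `max_distance` cutoff are hypothetical. It is not a description of the actual Elo or SoundTouch implementation.

```python
def locate_touch(signal, profiles, max_distance):
    """Return the stored position whose profile best matches `signal`,
    or None if nothing matches closely enough (e.g. ambient noise).

    `profiles` maps an (x, y) position to its reference signal.
    """
    best_pos, best_dist = None, float("inf")
    for pos, profile in profiles.items():
        # Sum of squared differences as a simple dissimilarity measure.
        dist = sum((s - p) ** 2 for s, p in zip(signal, profile))
        if dist < best_dist:
            best_pos, best_dist = pos, dist
    return best_pos if best_dist <= max_distance else None
```

Rejecting signals that are far from every stored profile mirrors how extraneous sounds are ignored, and calling the function repeatedly on successive signals corresponds to tracking a moving touch.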

Construction

There are several principal ways to build a touchscreen. The key goals are to recognize one or more fingers touching a display, to interpret the command that this represents, and to communicate the command to the appropriate application.

In the resistive approach, which used to be the most popular technique, there are typically four layers:

- Top polyester-coated layer with a transparent metallic-conductive coating on the bottom.

- Adhesive spacer

- Glass layer coated with a transparent metallic-conductive coating on the top

- Adhesive layer on the backside of the glass for mounting.

When a user touches the surface, the system records the change in the electric current that flows through the display.

Dispersive-signal technology measures the piezoelectric effect (the voltage generated when mechanical force is applied to a material) that occurs chemically when a strengthened glass substrate is touched.

There are two infrared-based approaches. In one, an array of sensors detects a finger touching or almost touching the display, thereby interrupting infrared light beams projected over the screen. In the other, bottom-mounted infrared cameras record heat from screen touches.
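As a rough illustration of the beam-interruption approach, the sketch below estimates a touch position from the indices of the blocked horizontal and vertical beams. The beam spacing (`pitch_mm`) and the choice of averaging the blocked indices are assumptions made for this example, not details taken from any particular product.

```python
def locate_from_beams(blocked_x, blocked_y, pitch_mm=5.0):
    """Estimate the touch position from interrupted infrared beams.

    blocked_x / blocked_y are lists of indices of the beams that are
    currently interrupted along each axis. pitch_mm is a hypothetical
    spacing between neighbouring beams.
    Returns (x_mm, y_mm), or None when no beam is blocked on an axis.
    """
    if not blocked_x or not blocked_y:
        return None
    # A finger usually blocks several adjacent beams; take their centre.
    center_x = sum(blocked_x) / len(blocked_x)
    center_y = sum(blocked_y) / len(blocked_y)
    return (center_x * pitch_mm, center_y * pitch_mm)
```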

In each case, the system determines the intended command based on the controls showing on the screen at the time and the location of the touch.
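Mapping a touch location to the control shown at that point is commonly called hit testing. The following is a minimal sketch under simple assumptions: controls are axis-aligned rectangles, and controls later in the list are drawn on top. The `Control` class and `hit_test` function are illustrative names, not part of any specific toolkit.

```python
from dataclasses import dataclass


@dataclass
class Control:
    """A rectangular on-screen control (hypothetical model)."""
    name: str
    x: float
    y: float
    width: float
    height: float

    def contains(self, tx, ty):
        return (self.x <= tx < self.x + self.width
                and self.y <= ty < self.y + self.height)


def hit_test(controls, tx, ty):
    """Return the name of the topmost control under the touch, or None."""
    for control in reversed(controls):  # last drawn is on top
        if control.contains(tx, ty):
            return control.name
    return None
```

For example, with an "OK" button at (0, 0) sized 100 by 40 and a "Cancel" button at (120, 0) of the same size, a touch at (130, 10) resolves to "Cancel", and a touch at (110, 10) falls between the two and resolves to nothing.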

Development

The development of multi-touch screens facilitated the tracking of more than one finger on the screen; thus, operations that require more than one finger are possible. These devices also allow multiple users to interact with the touchscreen simultaneously.

With the growing use of touchscreens, the cost of touchscreen technology is routinely absorbed into the products that incorporate it and is nearly eliminated. Touchscreen technology has demonstrated reliability and is found in airplanes, automobiles, gaming consoles, machine control systems, appliances, and handheld display devices including cellphones; the touchscreen market for mobile devices was projected to produce US$5 billion by 2009.[66]

The ability to accurately point on the screen itself is also advancing with the emerging graphics tablet-screen hybrids. Polyvinylidene fluoride (PVDF) plays a major role in this innovation due to its high piezoelectric properties, which allow the tablet to sense pressure, making such things as digital painting behave more like paper and pencil.[67]

TapSense, announced in October 2011, allows touchscreens to distinguish what part of the hand was used for input, such as the fingertip, knuckle and fingernail. This could be used in a variety of ways, for example, to copy and paste, to capitalize letters, or to activate different drawing modes.[68] [69]

A real practical integration between television images and the functions of a normal modern PC could be an innovation in the near future: for example, "all-live-information" on the internet about a film or the actors on video, a list of other music during a normal video clip of a song, or news about a person.

Ergonomics and usage

Touchscreen enable

For touchscreens to be effective input devices, users must be able to accurately select targets and avoid accidental selection of adjacent targets. The design of touchscreen interfaces should reflect technical capabilities of the system, ergonomics, cognitive psychology and human physiology.

Guidelines for touchscreen designs were first developed in the 2000s, based on early research and actual use of older systems, typically using infrared grids, which were highly dependent on the size of the user's fingers. These guidelines are less relevant for the bulk of modern touch devices which use capacitive or resistive touch technology.[70] [71]

From the mid-2000s, makers of operating systems for smartphones have promulgated standards, but these vary between manufacturers, and allow for significant variation in size based on technology changes, so are unsuitable from a human factors perspective.[72] [73] [74]

Much more important is the accuracy humans have in selecting targets with their finger or a pen stylus. The accuracy of user selection varies by position on the screen: users are most accurate at the center, less so at the left and right edges, and least accurate at the top edge and especially the bottom edge. The R95 accuracy (required radius for 95% target accuracy) varies from 7 mm (0.28 in) in the center to 12 mm (0.47 in) in the lower corners.[75] [76] [77] [78] [79] Users are subconsciously aware of this, and take more time to select targets which are smaller or at the edges or corners of the touchscreen.[80]
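The R95 figures above can be turned into a quick sizing check. The sketch below assumes, for illustration only, that a circular target whose radius equals R95 and which is centered on the user's aim point captures 95% of touches; only the two radii quoted in the text are included, and the region names are hypothetical labels.

```python
# R95 radii quoted in the text, in millimetres.
R95_MM = {"center": 7.0, "lower_corner": 12.0}


def min_target_diameter_mm(region):
    """Smallest circular target diameter that covers the R95 radius."""
    return 2 * R95_MM[region]


def meets_r95(target_diameter_mm, region):
    """True if a target of this diameter is at least 2 x R95 wide."""
    return target_diameter_mm >= min_target_diameter_mm(region)
```

Under these assumptions a 10 mm button falls short of the 14 mm suggested for the center of the screen, and a target in a lower corner would need to be 24 mm across.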

This user inaccuracy is a result of parallax, visual acuity and the speed of the feedback loop between the eyes and fingers. The precision of the human finger alone is much, much higher than this, so when assistive technologies such as on-screen magnifiers are provided, users can move their finger (once in contact with the screen) with precision as small as 0.1 mm (0.004 in).[81]

Hand position, digit used and switching

Users of handheld and portable touchscreen devices hold them in a variety of ways, and routinely change their method of holding and selection to suit the position and type of input. There are four basic types of handheld interaction:

- Holding at least in part with both hands, tapping with a single thumb

- Holding with two hands and tapping with both thumbs

- Holding with one hand, tapping with the finger (or rarely, thumb) of another hand

- Holding the device in one hand, and tapping with the thumb from that same hand

Use rates vary widely. While two-thumb tapping is encountered rarely (1–3%) for many general interactions, it is used for 41% of typing interaction.[82]

In addition, devices are often placed on surfaces (desks or tables) and tablets especially are used in stands. The user may point, select or gesture in these cases with their finger or thumb, and vary use of these methods.[83]

Combined with haptics

Touchscreens are often used with haptic response systems. A common example of this technology is the vibratory feedback provided when a button on the touchscreen is tapped. Haptics are used to improve the user's experience with touchscreens by providing simulated tactile feedback, and can be designed to react immediately, partly countering on-screen response latency. Research from the University of Glasgow (Brewster, Chohan, and Brown, 2007; and more recently Hogan) demonstrates that touchscreen users reduce input errors (by 20%), increase input speed (by 20%), and lower their cognitive load (by 40%) when touchscreens are combined with haptics or tactile feedback. On top of this, a study conducted in 2013 by Boston College explored the effects that touchscreens' haptic stimulation had on triggering psychological ownership of a product. Their research concluded that a touchscreen's ability to incorporate high amounts of haptic involvement resulted in customers feeling more endowment to the products they were designing or buying. The study also reported that consumers using a touchscreen were willing to accept a higher price point for the items they were purchasing.[84]

Customer service

Touchscreen technology has become integrated into many aspects of the customer service industry in the 21st century.[85] The restaurant industry is a good example of touchscreen implementation into this domain. Chain restaurants such as Taco Bell,[86] Panera Bread, and McDonald's offer touchscreens as an option when customers are ordering items off the menu.[87] While the addition of touchscreens is a development for this industry, customers may choose to bypass the touchscreen and order from a traditional cashier.[86] To take this a step further, a restaurant in Bangalore has attempted to completely automate the ordering process. Customers sit down at a table embedded with touchscreens and order off an extensive menu. Once the order is placed, it is sent electronically to the kitchen.[88] These types of touchscreens fit under the point-of-sale (POS) systems mentioned in the lead section.

"Gorilla arm"

Extended use of gestural interfaces without the ability of the user to rest their arm is referred to as "gorilla arm".[89] It can result in fatigue, and even repetitive stress injury when routinely used in a work setting. Certain early pen-based interfaces required the operator to work in this position for much of the workday.[90] Allowing the user to rest their hand or arm on the input device or a frame around it is a solution for this in many contexts. This phenomenon is often cited as an example of movements to be minimized by proper ergonomic design.

Unsupported touchscreens are still fairly common in applications such as ATMs and data kiosks, but are not an issue as the typical user only engages for brief and widely spaced periods.[91]

Fingerprints

Touchscreens can suffer from the problem of fingerprints on the display. This can be mitigated by the use of materials with optical coatings designed to reduce the visible effects of fingerprint oils. Most modern smartphones have oleophobic coatings, which lessen the amount of oil residue. Another option is to install a matte-finish anti-glare screen protector, which creates a slightly roughened surface that does not easily retain smudges.

Glove touch

Touchscreens do not work most of the time when the user wears gloves. The thickness of the glove and the material it is made of play a significant role in the ability of a touchscreen to pick up a touch.

See also

- Dual-touchscreen

- Pen computing

- Energy harvesting

- Flexible keyboard

- Gestural interface

- Graphics tablet

- Light pen

- List of touch-solution manufacturers

- Lock screen

- Tablet computer

- Touch switch

- Touchscreen remote control

- Multi-touch

- Omnitouch

- Pointing device gesture

- Sensacell

- SixthSense

- Nintendo DS

References

- ^ Walker, Geoff (August 2012). "A review of technologies for sensing contact location on the surface of a display: Review of touch technologies". Journal of the Society for Information Display. 20 (8): 413–440. doi:10.1002/jsid.100. S2CID 40545665.

- ^ "What is a Touch Screen?". www.computerhope.com. Retrieved 2020-09-07.

- ^ Allvin, Rhian Evans (2014-09-01). "Technology in the Early Childhood Classroom". YC Young Children. 69 (4): 62. ISSN 1538-6619.

- ^ "The first capacitative touch screens at CERN". CERN Courrier. 31 March 2010. Archived from the original on 4 September 2010. Retrieved 2010-05-25.

- ^ Bent Stumpe (16 March 1977). "A new principle for x-y touch system" (PDF). CERN. Retrieved 2010-05-25.

- ^ Bent Stumpe (6 February 1978). "Experiments to find a manufacturing process for an x-y touch screen" (PDF). CERN. Retrieved 2010-05-25.

- ^ Beck, Frank; Stumpe, Bent (May 24, 1973). Two devices for operator interaction in the central control of the new CERN accelerator (Report). CERN. CERN-73-06. Retrieved 2017-09-14.

- ^ Johnson, E.A. (1965). "Touch Display - A novel input/output device for computers". Electronics Letters. 1 (8): 219–220. Bibcode:1965ElL.....1..219J. doi:10.1049/el:19650200.

- ^ "1965 - The Touchscreen". Malvern Radar and Technology History Society. 2016. Archived from the original on 31 January 2018. Retrieved 24 July 2017.

- ^ Johnson, E.A. (1967). "Touch Displays: A Programmed Man-Machine Interface". Ergonomics. 10 (2): 271–277. doi:10.1080/00140136708930868.

- ^ Orr, N.W.; Hopkins, V.D. (1968). "The Role of Touch Display in Air Traffic Control". The Controller. 7: 7–9.

- ^ Lowe, J. F. (18 November 1974). "Computer creates custom control panel". Design News: 54–55.

- ^ Stumpe, Bent; Sutton, Christine (1 June 2010). "CERN touch screen". Symmetry Magazine. A joint Fermilab/SLAC publication. Archived from the original on 2016-11-16. Retrieved 16 November 2016.

- ^ "Another of CERN's many inventions! - CERN Document Server". CERN Document Server. Retrieved 29 July 2015.

- ^ a b c Mallebrein, Rainer (2018-02-18). "Oral History of Rainer Mallebrein" (PDF) (Interview). Interviewed by Steinbach, Günter. Singen am Hohentwiel, Germany: Computer History Museum. CHM Ref: X8517.2018. Archived (PDF) from the original on 2021-01-27. Retrieved 2021-08-23. (18 pages)

- ^ a b c Ebner, Susanne (2018-01-24). "Entwickler aus Singen über die Anfänge der Computermaus: "Wir waren der Zeit voraus"" [Singen-based developer about the advent of the computer mouse: "We were ahead of time"]. Leben und Wissen. Südkurier (in German). Konstanz, Germany: Südkurier GmbH. Archived from the original on 2021-03-02. Retrieved 2021-08-22.

- ^ F. Ebeling, R. Johnson, R. Goldhor, Infrared light beam x-y position encoder for display devices, US 3775560, granted November 27, 1973.

- ^ The H.P. Touch Computer (1983) Archived 2017-08-24 at the Wayback Machine. YouTube (2008-02-19). Retrieved on 2013-08-16.

- ^ USPTO. "DISCRIMINATING CONTACT SENSOR". Archived from the original on 19 May 2013. Retrieved 6 April 2013.

- ^ "oakridger.com, "G. Samuel Hurst -- the 'Tom Edison' of ORNL", December 14 2010". Retrieved 2012-04-11. [dead link]

- ^ Japanese PCs (1984) Archived 2017-07-07 at the Wayback Machine (12:21), Computer Chronicles

- ^ "Terebi Oekaki / Sega Graphic Board - Articles - SMS Power!". Archived from the original on 23 July 2015. Retrieved 29 July 2015.

- ^ "Software that takes games seriously". New Scientist. Reed Business Information. March 26, 1987. p. 34. Archived from the original on January 31, 2018 – via Google Books.

- ^ Technology Trends: 2nd Quarter 1986 Archived 2016-10-15 at the Wayback Machine, Japanese Semiconductor Industry Service - Volume 2: Technology & Government

- ^ Biferno, M.A., Stanley, D.L. (1983). The Touch-Sensitive Control/Display Unit: A promising Computer Interface. Technical Paper 831532, Aerospace Congress & Exposition, Long Beach, CA: Society of Automotive Engineers.

- ^ "1986, Electronics Developed for Lotus Active Suspension Technology - Generations of GM". History.gmheritagecenter.com. Archived from the original on 2013-06-17. Retrieved 2013-01-07.

- ^ Badal, Jaclyne (2008-06-23). "When Design Goes Bad". Online.wsj.com. Archived from the original on 2016-03-16. Retrieved 2013-01-07.

- ^ The ViewTouch restaurant system Archived 2009-09-09 at the Wayback Machine by Giselle Bisson

- ^ "The World Leader in GNU-Linux Restaurant POS Software". Viewtouch.com. Archived from the original on 2012-07-17. Retrieved 2013-01-07.

- ^ "File:Comdex 1986.png". Wikimedia Commons. 2012-09-11. Archived from the original on 2012-12-20. Retrieved 2013-01-07.

- ^ Potter, R.; Weldon, L.; Shneiderman, B. Improving the accuracy of touch screens: an experimental evaluation of three strategies. Proc. of the Conference on Human Factors in Computing Systems, CHI '88. Washington, DC. pp. 27–32. doi:10.1145/57167.57171. Archived from the original on 2015-12-08.

- ^ a b Sears, Andrew; Plaisant, Catherine; Shneiderman, Ben (June 1990). "A new era for high-precision touchscreens". In Hartson, R.; Hix, D. (eds.). Advances in Human-Computer Interaction. Vol. 3. Ablex (1992). ISBN 978-0-89391-751-7. Archived from the original on October 9, 2014.

- ^ "1991 video of the HCIL touchscreen toggle switches (University of Maryland)". YouTube. Archived from the original on 13 March 2016. Retrieved 3 December 2015.

- ^ Apple touch-screen patent war comes to the UK (2011). Event occurs at 1:24 min in video. Archived from the original on 8 December 2015. Retrieved 3 December 2015.

- ^ Star7 Demo on YouTube. Retrieved on 2013-08-16.

- ^ "The LG KE850: touchable chocolate". Engadget.

- ^ Travis Fahs (April 21, 2009). "IGN Presents the History of SEGA". IGN. p. 7. Archived from the original on February 4, 2012. Retrieved 2011-04-27.

- ^ "Short Course on Projected Capacitance" (PDF).

- ^ "What is touch screen? - Definition from WhatIs.com". WhatIs.com. Retrieved 2020-09-07.

- ^ Lancet, Yaara. (2012-07-19) What Are The Differences Between Capacitive & Resistive Touchscreens? Archived 2013-03-09 at the Wayback Machine. Makeuseof.com. Retrieved on 2013-08-16.

- ^ Vlad Savov. "Nintendo 3DS has resistive touchscreen for backwards compatibility, what's the Wii U's excuse?". Engadget. AOL. Archived from the original on 12 November 2015. Retrieved 29 July 2015.

- ^ Hong, Chan-Hwa; Shin, Jae-Heon; Ju, Byeong-Kwon; Kim, Kyung-Hyun; Park, Nae-Man; Kim, Bo-Sul; Cheong, Woo-Seok (1 November 2013). "Index-Matched Indium Tin Oxide Electrodes for Capacitive Touch Screen Panel Applications". Journal of Nanoscience and Nanotechnology. 13 (11): 7756–7759. doi:10.1166/jnn.2013.7814. PMID 24245328. S2CID 24281861.

- ^ "Fujifilm reinforces the production facilities for its touch-panel sensor film "EXCLEAR"". FUJIFILM Europe.

- ^ "Development of a Thin Double-sided Sensor Film "EXCLEAR" for Touch Panels via Silver Halide Photographic Technology" (PDF). www.fujifilm.com. Retrieved 2019-12-09.

- ^ "What's behind your smartphone screen? This... |". fujifilm-innovation.tumblr.com.

- ^ "Environment: [Topics2] Development of Materials That Solve Environmental Problems EXCLEAR thin double-sided sensor film for touch panels | FUJIFILM Holdings". www.fujifilmholdings.com.

- ^ Kent, Joel (May 2010). "Touchscreen technology basics & a new development". CMOS Emerging Technologies Conference. CMOS Emerging Technologies Research. 6: 1–13. ISBN 9781927500057.

- ^ Ganapati, Priya (5 March 2010). "Finger Fail: Why Most Touchscreens Miss the Point". Wired. Archived from the original on 2014-05-11. Retrieved 9 November 2019.

- ^ Andi (2014-01-24). "How noise affects touch screens". West Florida Components. Retrieved 2020-10-24.

- ^ "Touch screens and charger noise |". epanorama.net. 2013-03-12.

- ^ "Aggressively combat noise in capacitive touch applications". EDN.com. 2013-04-08.

- ^ "Please Touch! Explore The Evolving World Of Touchscreen Technology". electronicdesign.com. Archived from the original on 2015-12-13. Retrieved 2009-09-02.

- ^ "formula for relationship between plate area and capacitance".

- ^ "Touch operated keyboard". Archived from the original on 2018-01-31. Retrieved 2018-01-30.

- ^ "Multipoint touchscreen".

- ^ "Espacenet - Original document". Worldwide.espacenet.com. 2017-04-26. Retrieved 2018-02-22.

- ^ Knowledge base: Multi-touch hardware Archived 2012-02-03 at the Wayback Machine

- ^ "Use of RC oscillator in touchscreen".

- ^ "Ambiguity caused by multitouch in self capacitance touchscreens" (PDF).

- ^ a b "Multitouch using Self Capacitance".

- ^ "Self-capacitive touch described on official Sony Developers blog". Archived from the original on 2012-03-14. Retrieved 2012-03-14.

- ^ Du, Li (2016). "Comparison of self capacitance and mutual capacitance" (PDF). arXiv:1612.08227. doi:10.1017/S1743921315010388. S2CID 220453196.

- ^ "Hybrid self and mutual capacitance touch sensing controllers".

- ^ Beyers, Tim (2008-02-13). "Innovation Series: Touchscreen Technology". The Motley Fool. Archived from the original on 2009-03-24. Retrieved 2009-03-16.

- ^ "Acoustic Pulse Recognition Touchscreens" (PDF). Elo Touch Systems. 2006: 3. Archived (PDF) from the original on 2011-09-05. Retrieved 2011-09-27.

- ^ "Touch Screens in Mobile Devices to Deliver $5 Billion Next Year | Press Release". ABI Research. 2008-09-10. Archived from the original on 2011-07-07. Retrieved 2009-06-22.

- ^ "Insights Into PVDF Innovations". Fluorotherm. 17 August 2015. Archived from the original on 15 October 2016.

- ^ "New Screen Technology, TapSense, Can Distinguish Between Different Parts Of Your Hand". Archived from the original on October 20, 2011. Retrieved October 19, 2011.

- ^ "TapSense: Enhancing Finger Interaction on Touch Surfaces". Archived from the original on 11 January 2012. Retrieved 28 January 2012.

- ^ "ANSI/HFES 100-2007 Human Factors Engineering of Computer Workstations". Human Factors & Ergonomics Society. Santa Monica, CA. 2007.

- ^ "Ergonomic Requirements for Office Work with Visual Display Terminals (VDTs)–Part 9: Requirements for Non-keyboard Input Devices". International Organization for Standardization. Geneva, Switzerland. 2000.

- ^ "iOS Human Interface Guidelines". Apple. Archived from the original on 2014-08-26. Retrieved 2014-08-24.

- ^ "Metrics and Grids". Archived from the original on 2014-07-16. Retrieved 2014-08-24.

- ^ "Touch interactions for Windows". Microsoft. Archived from the original on 2014-08-26. Retrieved 2014-08-24.

- ^ Hoober, Steven (2013-02-18). "Common Misconceptions About Touch". UXmatters. Archived from the original on 2014-08-26. Retrieved 2014-08-24.

- ^ Hoober, Steven (2013-11-11). "Design for Fingers and Thumbs Instead of Touch". UXmatters. Archived from the original on 2014-08-26. Retrieved 2014-08-24.

- ^ Hoober, Steven; Shank, Patti; Boll, Susanne (2014). "Making mLearning Usable: How We Use Mobile Devices". Santa Rosa, CA.

- ^ Henze, Niels; Rukzio, Enrico; Boll, Susanne (2011). "100,000,000 Taps: Analysis and Improvement of Touch Performance in the Large". Proceedings of the 13th International Conference on Human Computer Interaction with Mobile Devices and Services. New York.

- ^ Parhi, Pekka (2006). "Target Size Study for One-Handed Thumb Use on Small Touchscreen Devices". Proceedings of MobileHCI 2006. New York.

- ^ Lee, Seungyons; Zhai, Shumin (2009). "The Performance of Touch Screen Soft Buttons". Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. New York: 309. doi:10.1145/1518701.1518750. ISBN 9781605582467. S2CID 2468830.

- ^ Bérard, François (2012). "Measuring the Linear and Rotational User Precision in Touch Pointing". Proceedings of the 2012 ACM International Conference on Interactive Tabletops and Surfaces. New York: 183. doi:10.1145/2396636.2396664. ISBN 9781450312097. S2CID 15765730.

- ^ Hoober, Steven (2014-09-02). "Insights on Switching, Centering, and Gestures for Touchscreens". UXmatters. Archived from the original on 2014-09-06. Retrieved 2014-08-24.

- ^ Hoober, Steven (2013-02-18). "How Do Users Actually Hold Mobile Devices?". UXmatters. Archived from the original on 2014-08-26. Retrieved 2014-08-24.

- ^ Brasel, S. Adam; Gips, James (2014). "Tablets, touchscreens, and touchpads: How varying touch interfaces trigger psychological ownership and endowment". Journal of Consumer Psychology. 24 (2): 226–233. doi:10.1016/j.jcps.2013.10.003.

- ^ Zhu, Ying; Meyer, Jeffrey (September 2017). "Getting in touch with your thinking style: How touchscreens influence purchase". Journal of Retailing and Consumer Services. 38: 51–58. doi:10.1016/j.jretconser.2017.05.006.

- ^ a b Hueter, Jackie; Swart, William (February 1998). "An Integrated Labor-Management System for Taco Bell". Interfaces. 28 (1): 75–91. CiteSeerX 10.1.1.565.3872. doi:10.1287/inte.28.1.75. S2CID 18514383.

- ^ Baker, Rosie (19 May 2011). "Food: McDonald's explores digital touchscreens". Marketing Week: 4. Gale A264377887.

- ^ "A RESTAURANT THAT LETS GUESTS PLACE ORDERS VIA A TOUCHSCREEN TABLE (Touche is said to be the first touchscreen restaurant in India and fifth in the world)". India Business Insight. 31 August 2011. Gale A269135159.

- ^ "gorilla arm". Catb.org. Archived from the original on 2012-01-21. Retrieved 2012-01-04.

- ^ "Gesture Fatigue ruined light pens forever. Make sure it doesn't ruin your gesture design". Gesture Design Blog. Archived from the original on 2015-02-13. Retrieved 2014-08-23.

- ^ David Pogue (January 3, 2013). "Why Touch Screens Will Not Take Over". Scientific American. 308 (1): 25. doi:10.1038/scientificamerican0113-25. PMID 23342443.

Sources

- Shneiderman, B. (1991). "Touch screens now offer compelling uses". IEEE Software. 8 (2): 93–94, 107. doi:10.1109/52.73754. S2CID 14561929.

- Potter, R.; Weldon, L. & Shneiderman, B. (1988). An experimental evaluation of three strategies. Proc. CHI'88. Washington, DC: ACM Press. pp. 27–32.

- Sears, A.; Plaisant, C. & Shneiderman, B. (1992). "A new era for high precision touchscreens". In Hartson, R. & Hix, D. (eds.). Advances in Human-Computer Interaction. Vol. 3. Ablex, NJ. pp. 1–33.

- Holzinger, Andreas (2003). "Finger Instead of Mouse: Touch Screens as a Means of Enhancing Universal Access". Universal Access Theoretical Perspectives, Practice, and Experience. Lecture Notes in Computer Science. Vol. 2615. pp. 387–397. doi:10.1007/3-540-36572-9_30. ISBN 978-3-540-00855-2.

External links

Source: https://en.wikipedia.org/wiki/Touchscreen
